20 years after Deep Blue defeated the World Champion at Chess, Alpha Go did the same for the World Champion at Go. What are the key changes that make it so much better?

Deep Blue

Excerpts from: https://en.wikipedia.org/wiki/Deep_Blue_(chess_computer)

The system derived its playing strength mainly from brute force computing power. It was a massively parallel, RS/6000 SP Thin P2SC-based system with 30 nodes, with each node containing a 120 MHz P2SC microprocessor, enhanced with 480 special purpose VLSI chess chips.

To be fair, it’s not just “brute force computing” it does Alpha-beta pruning with some neat heuristics programmed by the team – “Deep Blue employed custom VLSI chips to execute the alpha-beta search algorithm in parallel, an example of GOFAI (Good Old-Fashioned Artificial Intelligence) rather than of deep learning which would come a decade later. It was a brute force approach, and one of its developers even denied that it was artificial intelligence at all”

Here’s the ground-breaking (back in the day) paper for Deep Blue – https://core.ac.uk/download/pdf/82416379.pdf

Excerpts from: https://www.scientificamerican.com/article/20-years-after-deep-blue-how-ai-has-advanced-since-conquering-chess/

Humans have been studying chess openings for centuries and developed their own favorite [moves]. The grand masters helped us choose a bunch of those to program into Deep Blue.

How did Deep Blue advance from 1996 to 1997 in order to beat Kasparov?

We did a couple of things. We more or less doubled the speed of the system by creating a new generation of hardware. And then we increased the chess knowledge of the system by adding features to the chess chip that enabled it to recognize different positions and made it more aware of chess concepts. Those chips could then search through a tree of possibilities to figure out the best move in a position. Part of the improvement between ‘96 and ‘97 is we detected more patterns in a chess position and could put values on them and therefore evaluate chess positions more accurately. The 1997 version of Deep Blue searched between 100 million and 200 million positions per second, depending on the type of position. The system could search to a depth of between six and eight pairs of moves—one white, one black—to a maximum of 20 or even more pairs in some situations.

Excerpts from: https://en.wikipedia.org/wiki/Deep_Blue_(chess_computer)

Deep Blue’s evaluation function was initially written in a generalized form, with many to-be-determined parameters (e.g. how important is a safe king position compared to a space advantage in the center, etc.). The optimal values for these parameters were then determined by the system itself, by analyzing thousands of master games. The evaluation function had been split into 8,000 parts, many of them designed for special positions. In the opening book there were over 4,000 positions and 700,000 grandmaster games. The endgame database contained many six-piece endgames and five or fewer piece positions. Before the second match, the chess knowledge of the program was fine-tuned by grandmaster Joel Benjamin. The opening library was provided by grandmasters Miguel Illescas, John Fedorowicz, and Nick de Firmian.

AlphaGo Zero

Courtesy of:

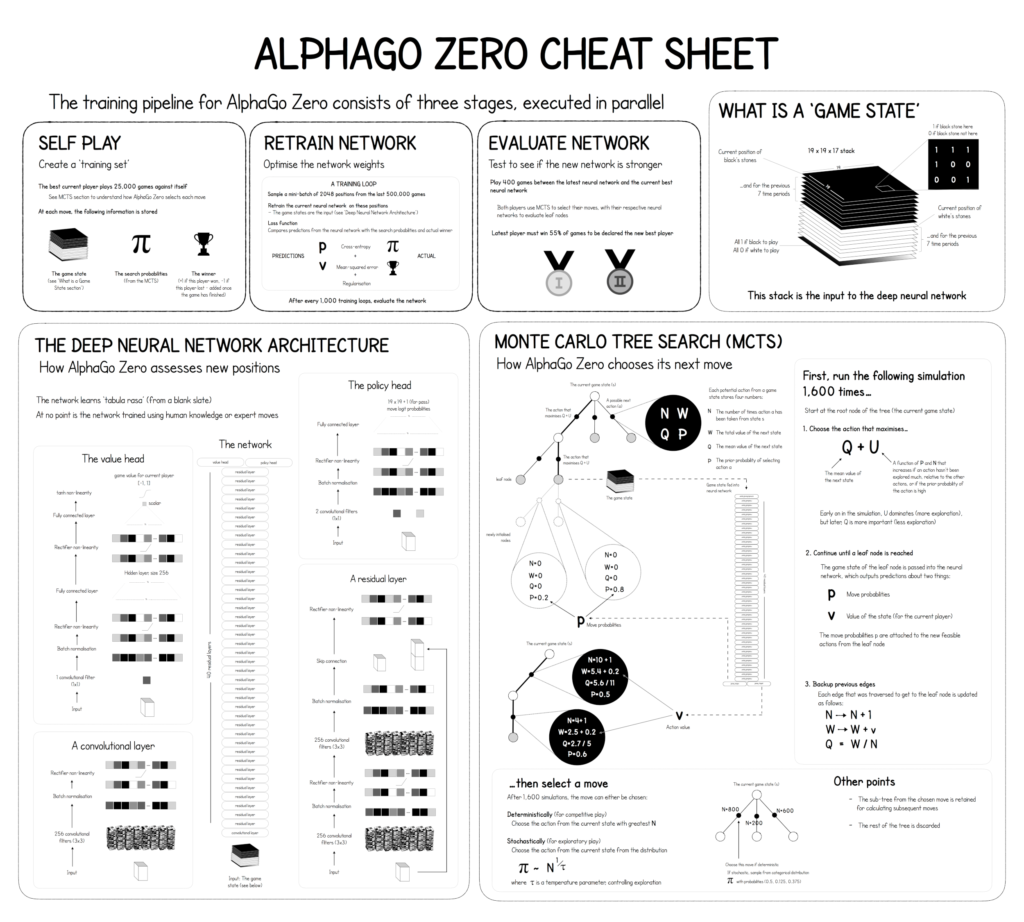

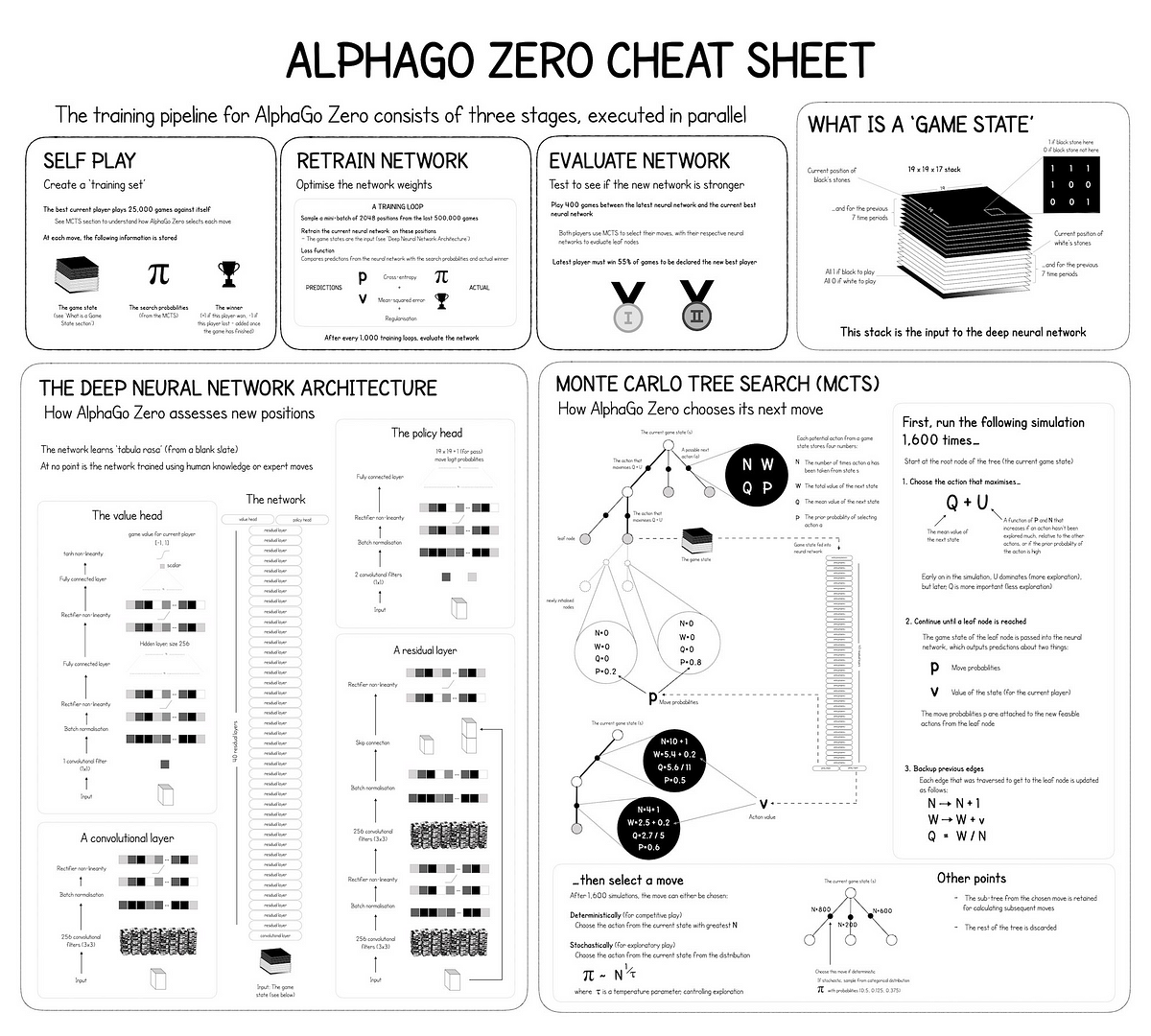

The paper that the cheat sheet is based on was published in Nature and is available here.

Some key assertions of the paper:

Here we introduce an algorithm based solely on reinforcement learning, without human data, guidance or domain knowledge beyond game rules.

Starting tabula rasa, our new program AlphaGo Zero achieved superhuman performance, winning 100–0 against the previously published, champion-defeating AlphaGo.

Our new method uses a deep neural network fθ with parameters θ. This neural network takes as an input the raw board representation s of the position and its history, and outputs both move probabilities and a value, (p, v) =fθ(s). The vector of move probabilities p represents the probability of selecting each move a (including pass)

Finally, it uses a simpler tree search that relies upon this single neural network to evaluate positions and sample moves, without performing any Monte Carlo rollouts. To achieve these results, we introduce a new reinforcement learning algorithm that incorporates lookahead search inside the training loop, resulting in rapid improvement and precise and stable learning.

Analysis

DeepMind’s AlphaZero replaces the simulation step with an evaluation based on a neural network. – https://en.wikipedia.org/wiki/Monte_Carlo_tree_search

Effectively, rather than scoring using man-crafted heuristics (i.e. human gameplay experience), AlphaGo encapsulates game playing “experience” in the neural network. This effectively means that AlphaGo learns its own evaluation heuristic function.

The neural network:

- Intuitively predicts the next best move based on the state of the game board.

- Learns that intuition by playing many games with itself without human intervention.

- Reduced the need for calculating ~200 million moves a second for an average of 170 seconds (average of 34 billion moves per move) to 1600 moves in ~0.4 seconds.

AlphaGo Zero took a few days to learn its “heuristic” function from tabula rasa in contrast to Deep Blue that had a database of chess moves from Grandmasters over the years.

Appendix

Deep Blue versus Garry Kasparov – Game 6 Log as released by IBM: