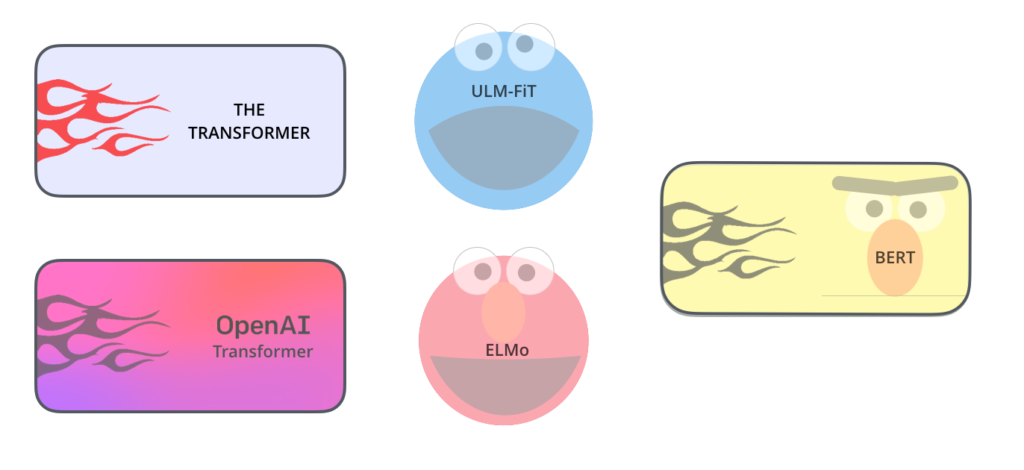

The post above examines the current state-of-the-art (SOTA) models, namely:

- ELMo

- USE (Universal Sentence Encoder)

- BERT

- XLNet

It goes on to introduce different methods to evaluate those models based on the task at hand.

It also briefly explains why the models differ.

The authors did not state which version of USE they used. There are two:

- Deep Averaging Network (USE-DAN)

- Transformer (USE-T)

The former is less accurate but more computationally efficient on longer sentences.

Another thing to note is that ELMo, while contextual, is not deeply contextual, according to the people who created BERT. BERT, by their account, is.

Also missing from the action is OpenAI’s GPT-2; I would have liked to see it included.

There is some buzz about XLNet, but I have not read enough about it to comment, other than to note that it promises the ability to learn longer-term dependencies in text. However, given that the compute cost of a transformer's self-attention grows quadratically with input length, I am curious how they handled that.
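To make the quadratic growth concrete, here is a minimal sketch that counts the entries in a standard full self-attention score matrix. It assumes plain self-attention where every token attends to every other token; the function name `attention_score_entries` is made up for illustration and says nothing about how XLNet itself is implemented.

```python
def attention_score_entries(seq_len: int, num_heads: int = 1) -> int:
    """Count the entries in the attention score matrices for one layer.

    Assumes full self-attention: each of the seq_len tokens is scored
    against all seq_len tokens, once per head.
    """
    return num_heads * seq_len * seq_len


# Doubling the input length quadruples the number of scores to compute.
for n in (128, 256, 512, 1024):
    print(f"{n:>5} tokens -> {attention_score_entries(n):>9} scores per head")
```

Going from 128 to 1024 tokens is an 8x increase in length but a 64x increase in attention scores, which is why long inputs get expensive quickly.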

Other takeaways:

…without specific fine-tuning, it seems that BERT is not suited to finding similar sentences.

…USE is trained on a number of tasks but one of the main tasks is to identify the similarity between pairs of sentences. The authors note that the task was to identify “semantic textual similarity (STS) between sentence pairs scored by Pearson correlation with human judgments”. This would help explain why USE is better at the similarity task.
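The STS evaluation quoted above can be sketched roughly as follows: score each sentence pair with the model (here, cosine similarity between the two embeddings), then compute the Pearson correlation between those scores and the human ratings. The embeddings and the human scores below are made-up toy values, not real model output or real STS data, and the helper names are my own.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical toy embeddings for three sentence pairs (assumption:
# in practice these would come from an encoder such as USE or BERT).
pairs = [
    ([1.0, 0.0, 1.0], [1.0, 0.1, 0.9]),  # near-paraphrases
    ([1.0, 0.0, 0.0], [0.5, 0.5, 0.0]),  # loosely related
    ([0.0, 1.0, 0.0], [1.0, 0.0, 0.2]),  # unrelated
]
# Made-up human similarity ratings on a 0-5 scale, one per pair.
human_scores = [4.8, 2.5, 0.4]

model_scores = [cosine_similarity(u, v) for u, v in pairs]
correlation = pearson(model_scores, human_scores)
print(f"Pearson correlation with human judgments: {correlation:.3f}")
```

A model whose similarity scores rise and fall with the human ratings gets a correlation close to 1, so a model trained directly on this objective would be expected to do well on a similarity task.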

Pre-trained models are your friend: most of the models published now can be fine-tuned, but you should try the pre-trained model first to get a quick idea of its suitability.